These are my study notes on instructor 권철민's '파이썬 머신러닝 완벽 가이드'. I'm still learning, so some of this may be wrong, and I'll keep revising it. :))

3. XGBoost (eXtreme Gradient Boosting)

XGBoost makes up for the shortcomings of GBM, covered earlier, and brings several strengths of its own.

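Main advantages of XGBoost)

- Strong predictive performance on regression as well as classification

- Faster training than GBM, thanks to CPU parallelism and GPU support

- Built-in performance features such as regularization and tree pruning

- Convenience features (early stopping, built-in cross-validation, native handling of missing values)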

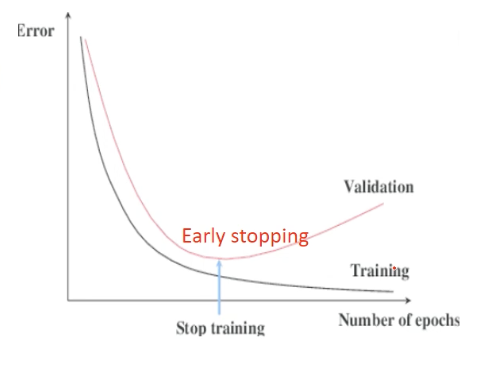

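※ Early stopping in XGBoost

Both XGBoost and LightGBM support early stopping.

With early stopping, training does not have to run for the full n_estimators rounds: if the cost function value (prediction error) has not decreased for a set number of consecutive rounds, training is halted at that point.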
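The stopping rule described above can be sketched in plain Python. This is only an illustration of the idea, not XGBoost's actual implementation: the function name `find_stopping_round` and the loss values are made up for the example, and `patience` plays the role of `early_stopping_rounds`.

```python
def find_stopping_round(losses, patience):
    """Return (stop_round, best_round) for a sequence of validation losses.

    Training stops once `patience` consecutive rounds pass without a new
    minimum. If that never happens, it runs through the last round.
    """
    best_loss = float("inf")
    best_round = -1
    for round_idx, loss in enumerate(losses):
        if loss < best_loss:
            # New best score: remember it and reset the waiting period.
            best_loss, best_round = loss, round_idx
        elif round_idx - best_round >= patience:
            # No improvement for `patience` rounds in a row: stop here.
            return round_idx, best_round
    return len(losses) - 1, best_round


# Hypothetical validation losses: improvement stalls after round 3.
losses = [0.61, 0.54, 0.49, 0.45, 0.46, 0.47, 0.46, 0.48]
print(find_stopping_round(losses, patience=3))    # stops early
print(find_stopping_round(losses, patience=100))  # runs every round
```

With a small `patience` the loop ends shortly after the loss stops improving; with a large one it simply runs to the end, which is the trade-off behind choosing `early_stopping_rounds`.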

Early stopping in the sklearn wrapper XGBoost: usage and parameters)

.fit(X, y, sample_weight=None, base_margin=None, eval_set=None, eval_metric=None, early_stopping_rounds=None, verbose=True, xgb_model=None, sample_weight_eval_set=None, feature_weights=None, callbacks=None)

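- early_stopping_rounds: the maximum number of consecutive rounds training will continue without an improvement in the evaluation metric

- eval_metric: the cost metric evaluated at each boosting round

- eval_set: a separate validation dataset used for evaluation. The cost metric is usually measured on this set after every round to check whether it is still decreasing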

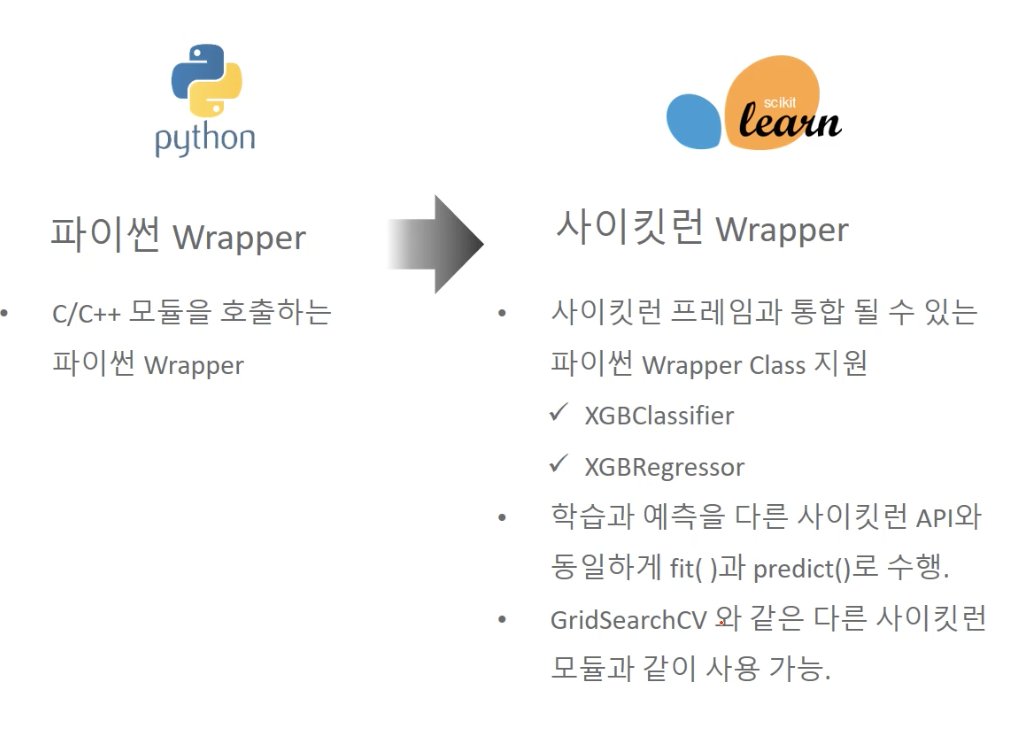

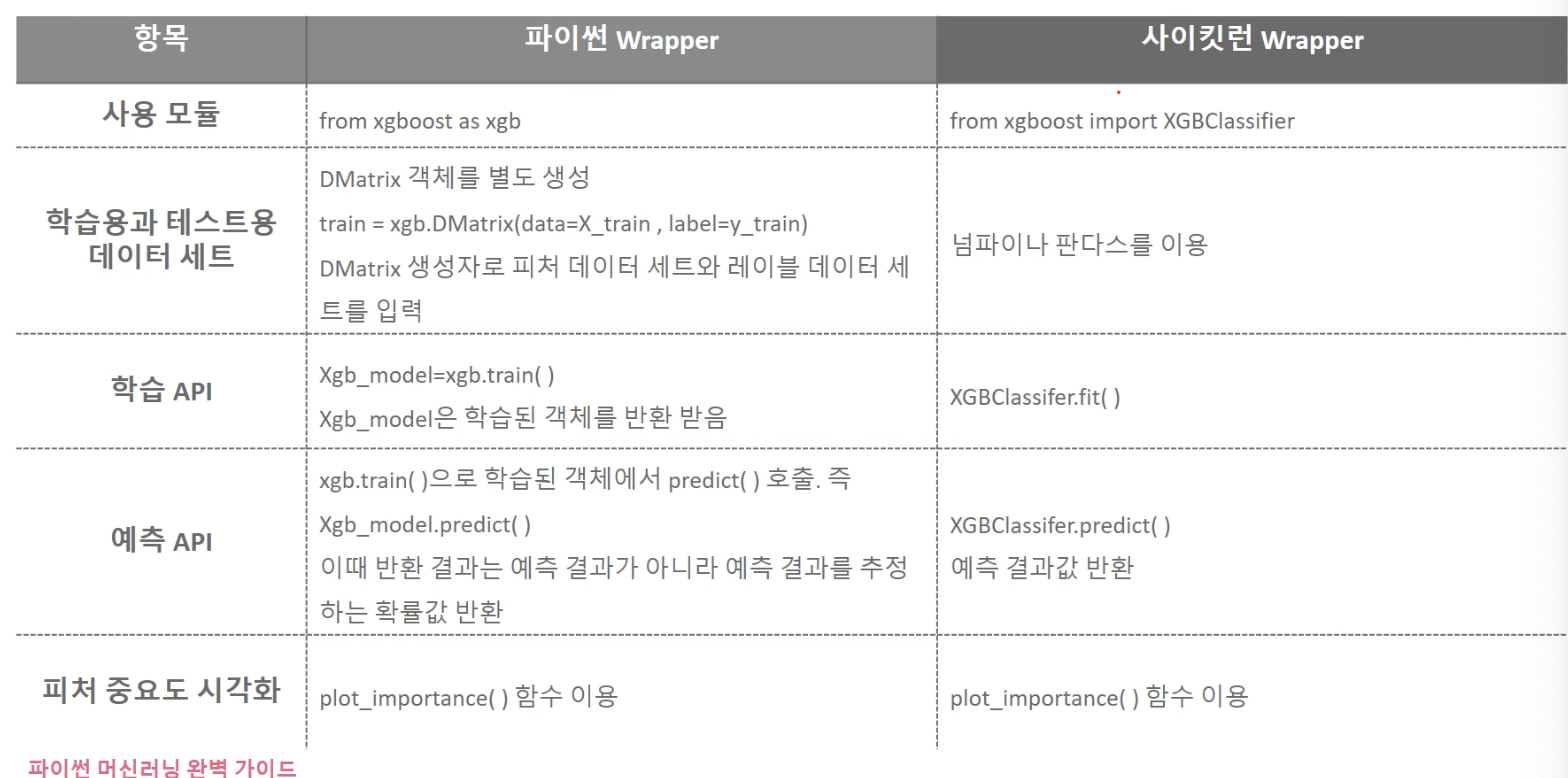

Using XGBoost) python wrapper and sklearn wrapper

xgboost.readthedocs.io/en/latest/python/python_api.html#module-xgboost.sklearn

www.kaggle.com/stuarthallows/using-xgboost-with-scikit-learn


python wrapper XGBoost vs. sklearn wrapper XGBoost)

blog.naver.com/PostView.nhn?blogId=gustn3964&logNo=221431714122&from=search&redirect=Log&widgetTypeCall=true&directAccess=false

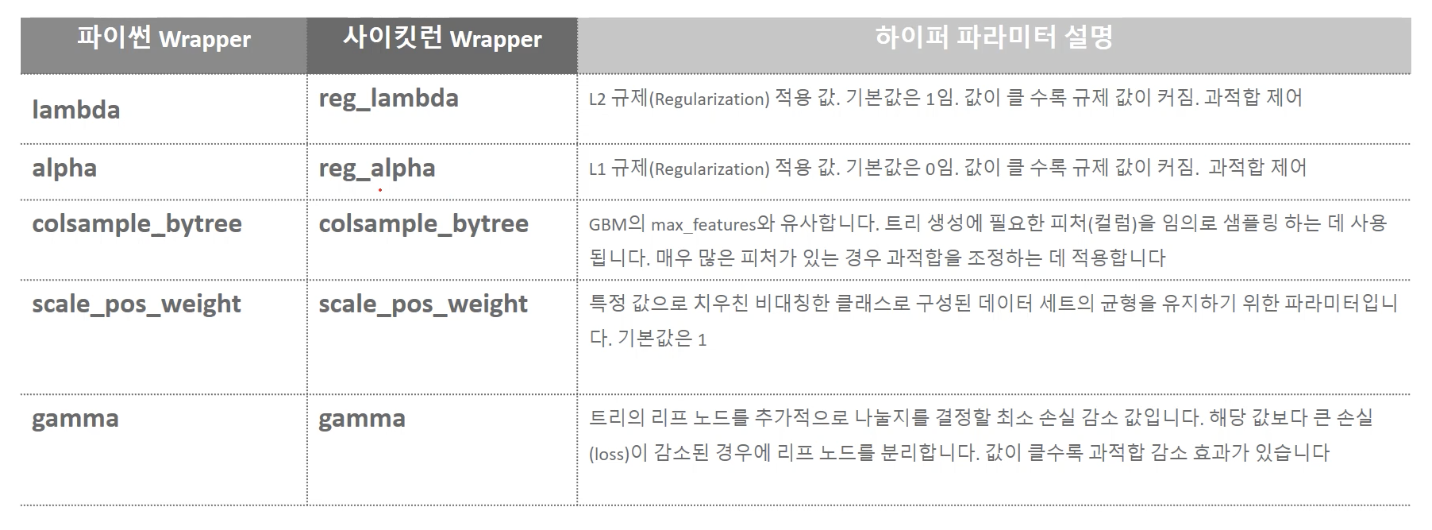
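One practical difference between the two wrappers is that a few hyperparameters go by different names. The sketch below translates a native-style parameter dict into sklearn-wrapper keyword names. The helper `to_sklearn_kwargs` is hypothetical (it is not part of xgboost), and the mapping only covers a handful of names; parameters not listed are assumed to be spelled the same in both wrappers.

```python
# Native (python wrapper) name -> scikit-learn wrapper name.
NATIVE_TO_SKLEARN = {
    "eta": "learning_rate",
    "lambda": "reg_lambda",
    "alpha": "reg_alpha",
}


def to_sklearn_kwargs(native_params, num_boost_round):
    """Translate native-style params into sklearn-wrapper keyword names."""
    kwargs = {NATIVE_TO_SKLEARN.get(name, name): value
              for name, value in native_params.items()}
    # The native API takes the number of rounds as an argument to train();
    # the sklearn wrapper calls the same setting n_estimators.
    kwargs["n_estimators"] = num_boost_round
    return kwargs


native_params = {"eta": 0.1, "max_depth": 3, "subsample": 0.8, "lambda": 1.0}
sklearn_kwargs = to_sklearn_kwargs(native_params, num_boost_round=400)
print(sklearn_kwargs)
```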

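XGBoost has a great many hyperparameters, which could make tuning seem daunting. Fortunately, the stronger the algorithm (and this is one of them, haha), the less tuning it tends to need, and the performance gained is usually small compared to the effort spent on tuning. So <파이썬 머신러닝 완벽 가이드> recommends trying just the following five adjustments when overfitting is severe.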

- Lower eta (learning_rate), to somewhere in 0.01~0.1. When you lower eta (learning_rate), raise num_boost_round (n_estimators) to compensate.

- Lower max_depth.

- Raise min_child_weight.

- Raise gamma.

- Adjust subsample and colsample_bytree to keep the trees from becoming too complex.
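To make the direction of each adjustment concrete, here is a small sketch that nudges a parameter dict in the five ways listed above. The function `make_more_conservative` is hypothetical, and the step sizes (halving the learning rate, capping sampling at 0.8, and so on) are arbitrary values chosen for illustration, not recommendations from the book.

```python
def make_more_conservative(params):
    """Return a copy of `params` adjusted in the five directions above."""
    tuned = dict(params)
    old_lr = tuned["learning_rate"]
    new_lr = max(0.01, old_lr / 2)
    tuned["learning_rate"] = new_lr                      # 1. lower the learning rate
    # ...and add rounds in proportion, so the total amount of learning is similar.
    tuned["n_estimators"] = round(tuned["n_estimators"] * (old_lr / new_lr))
    tuned["max_depth"] = max(1, tuned["max_depth"] - 1)  # 2. shallower trees
    tuned["min_child_weight"] += 1                       # 3. splits need more weight
    tuned["gamma"] += 0.1                                # 4. splits need more loss reduction
    tuned["subsample"] = min(tuned["subsample"], 0.8)    # 5. sample rows...
    tuned["colsample_bytree"] = min(tuned["colsample_bytree"], 0.8)  # ...and columns
    return tuned


baseline = {
    "learning_rate": 0.1, "n_estimators": 400, "max_depth": 3,
    "min_child_weight": 1, "gamma": 0.0,
    "subsample": 1.0, "colsample_bytree": 1.0,
}
tuned = make_more_conservative(baseline)
for name in baseline:
    print(f"{name}: {baseline[name]} -> {tuned[name]}")
```

In practice each change would be checked against a validation score rather than applied all at once.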

sklearn wrapper XGBoost code)

Here I use the sklearn wrapper rather than the python wrapper, together with early stopping, to analyze the Wisconsin breast cancer dataset used throughout the earlier sections.

※ As covered above, the parameters related to early stopping are early_stopping_rounds, eval_metric, and eval_set. How to choose an appropriate value for early_stopping_rounds is itself something that needs some thought.

Out[2]:

| | mean radius | mean texture | mean perimeter | mean area | mean smoothness | mean compactness | mean concavity | mean concave points | mean symmetry | mean fractal dimension | ... | worst radius | worst texture | worst perimeter | worst area | worst smoothness | worst compactness | worst concavity | worst concave points | worst symmetry | worst fractal dimension |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 17.99 | 10.38 | 122.80 | 1001.0 | 0.11840 | 0.27760 | 0.3001 | 0.14710 | 0.2419 | 0.07871 | ... | 25.38 | 17.33 | 184.60 | 2019.0 | 0.1622 | 0.6656 | 0.7119 | 0.2654 | 0.4601 | 0.11890 |
| 1 | 20.57 | 17.77 | 132.90 | 1326.0 | 0.08474 | 0.07864 | 0.0869 | 0.07017 | 0.1812 | 0.05667 | ... | 24.99 | 23.41 | 158.80 | 1956.0 | 0.1238 | 0.1866 | 0.2416 | 0.1860 | 0.2750 | 0.08902 |
| 2 | 19.69 | 21.25 | 130.00 | 1203.0 | 0.10960 | 0.15990 | 0.1974 | 0.12790 | 0.2069 | 0.05999 | ... | 23.57 | 25.53 | 152.50 | 1709.0 | 0.1444 | 0.4245 | 0.4504 | 0.2430 | 0.3613 | 0.08758 |
| 3 | 11.42 | 20.38 | 77.58 | 386.1 | 0.14250 | 0.28390 | 0.2414 | 0.10520 | 0.2597 | 0.09744 | ... | 14.91 | 26.50 | 98.87 | 567.7 | 0.2098 | 0.8663 | 0.6869 | 0.2575 | 0.6638 | 0.17300 |
| 4 | 20.29 | 14.34 | 135.10 | 1297.0 | 0.10030 | 0.13280 | 0.1980 | 0.10430 | 0.1809 | 0.05883 | ... | 22.54 | 16.67 | 152.20 | 1575.0 | 0.1374 | 0.2050 | 0.4000 | 0.1625 | 0.2364 | 0.07678 |

5 rows × 30 columns

[0] validation_0-logloss:0.61074

Will train until validation_0-logloss hasn't improved in 100 rounds.

[1] validation_0-logloss:0.54330

[2] validation_0-logloss:0.48703

[3] validation_0-logloss:0.43807

[4] validation_0-logloss:0.39739

[5] validation_0-logloss:0.36164

[6] validation_0-logloss:0.33155

[7] validation_0-logloss:0.30455

[8] validation_0-logloss:0.28063

[9] validation_0-logloss:0.25836

[10] validation_0-logloss:0.23880

[11] validation_0-logloss:0.21861

[12] validation_0-logloss:0.20191

[13] validation_0-logloss:0.18760

[14] validation_0-logloss:0.17434

[15] validation_0-logloss:0.16118

[16] validation_0-logloss:0.14883

[17] validation_0-logloss:0.13820

[18] validation_0-logloss:0.13136

[19] validation_0-logloss:0.12219

[20] validation_0-logloss:0.11408

[21] validation_0-logloss:0.10782

[22] validation_0-logloss:0.10078

[23] validation_0-logloss:0.09542

[24] validation_0-logloss:0.09040

[25] validation_0-logloss:0.08715

[26] validation_0-logloss:0.08375

[27] validation_0-logloss:0.07934

[28] validation_0-logloss:0.07633

[29] validation_0-logloss:0.07368

[30] validation_0-logloss:0.07056

[31] validation_0-logloss:0.06706

[32] validation_0-logloss:0.06486

[33] validation_0-logloss:0.06255

[34] validation_0-logloss:0.06007

[35] validation_0-logloss:0.05809

[36] validation_0-logloss:0.05577

[37] validation_0-logloss:0.05402

[38] validation_0-logloss:0.05296

[39] validation_0-logloss:0.05194

[40] validation_0-logloss:0.05024

[41] validation_0-logloss:0.04879

[42] validation_0-logloss:0.04721

[43] validation_0-logloss:0.04607

[44] validation_0-logloss:0.04505

[45] validation_0-logloss:0.04387

[46] validation_0-logloss:0.04328

[47] validation_0-logloss:0.04204

[48] validation_0-logloss:0.04086

[49] validation_0-logloss:0.03995

[50] validation_0-logloss:0.03946

[51] validation_0-logloss:0.03838

[52] validation_0-logloss:0.03745

[53] validation_0-logloss:0.03716

[54] validation_0-logloss:0.03687

[55] validation_0-logloss:0.03652

[56] validation_0-logloss:0.03558

[57] validation_0-logloss:0.03491

[58] validation_0-logloss:0.03406

[59] validation_0-logloss:0.03326

[60] validation_0-logloss:0.03269

[61] validation_0-logloss:0.03256

[62] validation_0-logloss:0.03221

[63] validation_0-logloss:0.03173

[64] validation_0-logloss:0.03135

[65] validation_0-logloss:0.03059

[66] validation_0-logloss:0.03009

[67] validation_0-logloss:0.02941

[68] validation_0-logloss:0.02865

[69] validation_0-logloss:0.02839

[70] validation_0-logloss:0.02807

[71] validation_0-logloss:0.02755

[72] validation_0-logloss:0.02716

[73] validation_0-logloss:0.02710

[74] validation_0-logloss:0.02690

[75] validation_0-logloss:0.02673

[76] validation_0-logloss:0.02636

[77] validation_0-logloss:0.02565

[78] validation_0-logloss:0.02537

[79] validation_0-logloss:0.02516

[80] validation_0-logloss:0.02508

[81] validation_0-logloss:0.02474

[82] validation_0-logloss:0.02471

[83] validation_0-logloss:0.02433

[84] validation_0-logloss:0.02440

[85] validation_0-logloss:0.02409

[86] validation_0-logloss:0.02381

[87] validation_0-logloss:0.02349

[88] validation_0-logloss:0.02322

[89] validation_0-logloss:0.02299

[90] validation_0-logloss:0.02274

[91] validation_0-logloss:0.02260

[92] validation_0-logloss:0.02257

[93] validation_0-logloss:0.02236

[94] validation_0-logloss:0.02191

[95] validation_0-logloss:0.02180

[96] validation_0-logloss:0.02161

[97] validation_0-logloss:0.02150

[98] validation_0-logloss:0.02149

[99] validation_0-logloss:0.02131

[100] validation_0-logloss:0.02119

[101] validation_0-logloss:0.02099

[102] validation_0-logloss:0.02089

[103] validation_0-logloss:0.02078

[104] validation_0-logloss:0.02065

[105] validation_0-logloss:0.02064

[106] validation_0-logloss:0.02037

[107] validation_0-logloss:0.02040

[108] validation_0-logloss:0.02031

[109] validation_0-logloss:0.02015

[110] validation_0-logloss:0.01997

[111] validation_0-logloss:0.01983

[112] validation_0-logloss:0.01974

[113] validation_0-logloss:0.01957

[114] validation_0-logloss:0.01943

[115] validation_0-logloss:0.01934

[116] validation_0-logloss:0.01910

[117] validation_0-logloss:0.01888

[118] validation_0-logloss:0.01892

[119] validation_0-logloss:0.01893

[120] validation_0-logloss:0.01871

[121] validation_0-logloss:0.01871

[122] validation_0-logloss:0.01855

[123] validation_0-logloss:0.01848

[124] validation_0-logloss:0.01823

[125] validation_0-logloss:0.01816

[126] validation_0-logloss:0.01807

[127] validation_0-logloss:0.01788

[128] validation_0-logloss:0.01793

[129] validation_0-logloss:0.01784

[130] validation_0-logloss:0.01782

[131] validation_0-logloss:0.01763

[132] validation_0-logloss:0.01762

[133] validation_0-logloss:0.01764

[134] validation_0-logloss:0.01740

[135] validation_0-logloss:0.01732

[136] validation_0-logloss:0.01723

[137] validation_0-logloss:0.01720

[138] validation_0-logloss:0.01714

[139] validation_0-logloss:0.01702

[140] validation_0-logloss:0.01693

[141] validation_0-logloss:0.01695

[142] validation_0-logloss:0.01694

[143] validation_0-logloss:0.01683

[144] validation_0-logloss:0.01671

[145] validation_0-logloss:0.01669

[146] validation_0-logloss:0.01665

[147] validation_0-logloss:0.01655

[148] validation_0-logloss:0.01644

[149] validation_0-logloss:0.01640

[150] validation_0-logloss:0.01632

[151] validation_0-logloss:0.01625

[152] validation_0-logloss:0.01629

[153] validation_0-logloss:0.01634

[154] validation_0-logloss:0.01633

[155] validation_0-logloss:0.01624

[156] validation_0-logloss:0.01614

[157] validation_0-logloss:0.01596

[158] validation_0-logloss:0.01598

[159] validation_0-logloss:0.01590

[160] validation_0-logloss:0.01591

[161] validation_0-logloss:0.01583

[162] validation_0-logloss:0.01593

[163] validation_0-logloss:0.01586

[164] validation_0-logloss:0.01572

[165] validation_0-logloss:0.01564

[166] validation_0-logloss:0.01554

[167] validation_0-logloss:0.01551

[168] validation_0-logloss:0.01540

[169] validation_0-logloss:0.01533

[170] validation_0-logloss:0.01529

[171] validation_0-logloss:0.01531

[172] validation_0-logloss:0.01522

[173] validation_0-logloss:0.01512

[174] validation_0-logloss:0.01519

[175] validation_0-logloss:0.01502

[176] validation_0-logloss:0.01504

[177] validation_0-logloss:0.01497

[178] validation_0-logloss:0.01485

[179] validation_0-logloss:0.01487

[180] validation_0-logloss:0.01479

[181] validation_0-logloss:0.01487

[182] validation_0-logloss:0.01493

[183] validation_0-logloss:0.01484

[184] validation_0-logloss:0.01478

[185] validation_0-logloss:0.01479

[186] validation_0-logloss:0.01487

[187] validation_0-logloss:0.01480

[188] validation_0-logloss:0.01468

[189] validation_0-logloss:0.01474

[190] validation_0-logloss:0.01475

[191] validation_0-logloss:0.01469

[192] validation_0-logloss:0.01477

[193] validation_0-logloss:0.01468

[194] validation_0-logloss:0.01463

[195] validation_0-logloss:0.01457

[196] validation_0-logloss:0.01447

[197] validation_0-logloss:0.01446

[198] validation_0-logloss:0.01453

[199] validation_0-logloss:0.01459

[200] validation_0-logloss:0.01452

[201] validation_0-logloss:0.01448

[202] validation_0-logloss:0.01440

[203] validation_0-logloss:0.01447

[204] validation_0-logloss:0.01446

[205] validation_0-logloss:0.01440

[206] validation_0-logloss:0.01434

[207] validation_0-logloss:0.01435

[208] validation_0-logloss:0.01434

[209] validation_0-logloss:0.01427

[210] validation_0-logloss:0.01434

[211] validation_0-logloss:0.01433

[212] validation_0-logloss:0.01427

[213] validation_0-logloss:0.01419

[214] validation_0-logloss:0.01415

[215] validation_0-logloss:0.01422

[216] validation_0-logloss:0.01421

[217] validation_0-logloss:0.01414

[218] validation_0-logloss:0.01415

[219] validation_0-logloss:0.01414

[220] validation_0-logloss:0.01408

[221] validation_0-logloss:0.01413

[222] validation_0-logloss:0.01410

[223] validation_0-logloss:0.01409

[224] validation_0-logloss:0.01409

[225] validation_0-logloss:0.01411

[226] validation_0-logloss:0.01405

[227] validation_0-logloss:0.01401

[228] validation_0-logloss:0.01398

[229] validation_0-logloss:0.01400

[230] validation_0-logloss:0.01394

[231] validation_0-logloss:0.01394

[232] validation_0-logloss:0.01393

[233] validation_0-logloss:0.01388

[234] validation_0-logloss:0.01385

[235] validation_0-logloss:0.01386

[236] validation_0-logloss:0.01377

[237] validation_0-logloss:0.01373

[238] validation_0-logloss:0.01370

[239] validation_0-logloss:0.01375

[240] validation_0-logloss:0.01370

[241] validation_0-logloss:0.01366

[242] validation_0-logloss:0.01363

[243] validation_0-logloss:0.01368

[244] validation_0-logloss:0.01367

[245] validation_0-logloss:0.01373

[246] validation_0-logloss:0.01366

[247] validation_0-logloss:0.01362

[248] validation_0-logloss:0.01363

[249] validation_0-logloss:0.01360

[250] validation_0-logloss:0.01355

[251] validation_0-logloss:0.01357

[252] validation_0-logloss:0.01352

[253] validation_0-logloss:0.01352

[254] validation_0-logloss:0.01352

[255] validation_0-logloss:0.01347

[256] validation_0-logloss:0.01344

[257] validation_0-logloss:0.01340

[258] validation_0-logloss:0.01342

[259] validation_0-logloss:0.01341

[260] validation_0-logloss:0.01335

[261] validation_0-logloss:0.01330

[262] validation_0-logloss:0.01328

[263] validation_0-logloss:0.01333

[264] validation_0-logloss:0.01330

[265] validation_0-logloss:0.01327

[266] validation_0-logloss:0.01327

[267] validation_0-logloss:0.01327

[268] validation_0-logloss:0.01325

[269] validation_0-logloss:0.01327

[270] validation_0-logloss:0.01332

[271] validation_0-logloss:0.01328

[272] validation_0-logloss:0.01323

[273] validation_0-logloss:0.01320

[274] validation_0-logloss:0.01319

[275] validation_0-logloss:0.01321

[276] validation_0-logloss:0.01318

[277] validation_0-logloss:0.01313

[278] validation_0-logloss:0.01315

[279] validation_0-logloss:0.01312

[280] validation_0-logloss:0.01312

[281] validation_0-logloss:0.01313

[282] validation_0-logloss:0.01311

[283] validation_0-logloss:0.01307

[284] validation_0-logloss:0.01312

[285] validation_0-logloss:0.01310

[286] validation_0-logloss:0.01303

[287] validation_0-logloss:0.01309

[288] validation_0-logloss:0.01306

[289] validation_0-logloss:0.01301

[290] validation_0-logloss:0.01301

[291] validation_0-logloss:0.01298

[292] validation_0-logloss:0.01294

[293] validation_0-logloss:0.01292

[294] validation_0-logloss:0.01294

[295] validation_0-logloss:0.01292

[296] validation_0-logloss:0.01289

[297] validation_0-logloss:0.01294

[298] validation_0-logloss:0.01292

[299] validation_0-logloss:0.01286

[300] validation_0-logloss:0.01287

[301] validation_0-logloss:0.01287

[302] validation_0-logloss:0.01282

[303] validation_0-logloss:0.01279

[304] validation_0-logloss:0.01275

[305] validation_0-logloss:0.01273

[306] validation_0-logloss:0.01270

[307] validation_0-logloss:0.01275

[308] validation_0-logloss:0.01275

[309] validation_0-logloss:0.01271

[310] validation_0-logloss:0.01268

[311] validation_0-logloss:0.01270

[312] validation_0-logloss:0.01264

[313] validation_0-logloss:0.01261

[314] validation_0-logloss:0.01259

[315] validation_0-logloss:0.01257

[316] validation_0-logloss:0.01256

[317] validation_0-logloss:0.01252

[318] validation_0-logloss:0.01254

[319] validation_0-logloss:0.01252

[320] validation_0-logloss:0.01246

[321] validation_0-logloss:0.01251

[322] validation_0-logloss:0.01249

[323] validation_0-logloss:0.01245

[324] validation_0-logloss:0.01242

[325] validation_0-logloss:0.01241

[326] validation_0-logloss:0.01239

[327] validation_0-logloss:0.01235

[328] validation_0-logloss:0.01240

[329] validation_0-logloss:0.01237

[330] validation_0-logloss:0.01235

[331] validation_0-logloss:0.01232

[332] validation_0-logloss:0.01231

[333] validation_0-logloss:0.01227

[334] validation_0-logloss:0.01225

[335] validation_0-logloss:0.01227

[336] validation_0-logloss:0.01225

[337] validation_0-logloss:0.01224

[338] validation_0-logloss:0.01224

[339] validation_0-logloss:0.01228

[340] validation_0-logloss:0.01226

[341] validation_0-logloss:0.01222

[342] validation_0-logloss:0.01222

[343] validation_0-logloss:0.01222

[344] validation_0-logloss:0.01220

[345] validation_0-logloss:0.01222

[346] validation_0-logloss:0.01221

[347] validation_0-logloss:0.01219

[348] validation_0-logloss:0.01217

[349] validation_0-logloss:0.01221

[350] validation_0-logloss:0.01218

[351] validation_0-logloss:0.01214

[352] validation_0-logloss:0.01212

[353] validation_0-logloss:0.01209

[354] validation_0-logloss:0.01209

[355] validation_0-logloss:0.01207

[356] validation_0-logloss:0.01204

[357] validation_0-logloss:0.01204

[358] validation_0-logloss:0.01203

[359] validation_0-logloss:0.01200

[360] validation_0-logloss:0.01200

[361] validation_0-logloss:0.01199

[362] validation_0-logloss:0.01198

[363] validation_0-logloss:0.01200

[364] validation_0-logloss:0.01198

[365] validation_0-logloss:0.01198

[366] validation_0-logloss:0.01194

[367] validation_0-logloss:0.01193

[368] validation_0-logloss:0.01192

[369] validation_0-logloss:0.01192

[370] validation_0-logloss:0.01190

[371] validation_0-logloss:0.01192

[372] validation_0-logloss:0.01189

[373] validation_0-logloss:0.01187

[374] validation_0-logloss:0.01187

[375] validation_0-logloss:0.01186

[376] validation_0-logloss:0.01185

[377] validation_0-logloss:0.01182

[378] validation_0-logloss:0.01184

[379] validation_0-logloss:0.01182

[380] validation_0-logloss:0.01179

[381] validation_0-logloss:0.01177

[382] validation_0-logloss:0.01176

[383] validation_0-logloss:0.01176

[384] validation_0-logloss:0.01173

[385] validation_0-logloss:0.01172

[386] validation_0-logloss:0.01172

[387] validation_0-logloss:0.01171

[388] validation_0-logloss:0.01172

[389] validation_0-logloss:0.01170

[390] validation_0-logloss:0.01171

[391] validation_0-logloss:0.01168

[392] validation_0-logloss:0.01166

[393] validation_0-logloss:0.01164

[394] validation_0-logloss:0.01162

[395] validation_0-logloss:0.01162

[396] validation_0-logloss:0.01161

[397] validation_0-logloss:0.01161

[398] validation_0-logloss:0.01158

[399] validation_0-logloss:0.01155

Confusion matrix

[[44 0]

[ 0 70]]

Accuracy: 1.0000, Precision: 1.0000, Recall: 1.0000, F1: 1.0000, AUC: 1.0000
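As a quick check on how these numbers follow from the confusion matrix, the arithmetic can be worked through by hand. The sketch assumes the matrix uses scikit-learn's layout (rows are true labels, columns are predicted labels) and that label 1 is the positive class. AUC is left out because it needs predicted probabilities, not just the matrix.

```python
# Confusion matrix from the output above: [[TN, FP], [FN, TP]].
confusion = [[44, 0],
             [0, 70]]
(tn, fp), (fn, tp) = confusion

accuracy = (tp + tn) / (tp + tn + fp + fn)   # share of all predictions that are right
precision = tp / (tp + fp)                   # of predicted positives, how many are real
recall = tp / (tp + fn)                      # of real positives, how many were found
f1 = 2 * precision * recall / (precision + recall)

print(f"accuracy={accuracy:.4f}, precision={precision:.4f}, "
      f"recall={recall:.4f}, F1={f1:.4f}")
```

With no false positives and no false negatives among the 114 test samples, every ratio comes out to exactly 1.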

Out[7]:

[Figure: feature importance plot. Title: "Feature importance", x-axis: "F score", y-axis: "Features"]

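Hmm.. but why doesn't early stopping kick in with my code, and why do the results come out as Accuracy: 1.0000, Precision: 1.0000, Recall: 1.0000, F1: 1.0000, AUC: 1.0000? Judging from the log, the validation logloss was still setting new lows right up to the final round ([399]), so it never went 100 rounds without improving, which would explain why training was not cut short. The perfect scores I can't explain yet. I need to experiment with this some more.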

Reference: 파이썬 머신러닝 완벽 가이드, 권철민